What Is A Quicksort Algorithm?

The Quicksort algorithm is a useful way to sort through data, available in a number of languages, such as C++ and Java. For many coders, quicksort is indispensable, and almost every programming forum cites quicksort as one of the most efficient sorting algorithms available.

But what exactly is the Quicksort algorithm and how does it work?

Recursion: Key to Understanding the Quicksort Algorithm

Quicksort is relatively simple, but a user needs to understand two key concepts in order to comprehend the algorithm as a while. The first concept is recursion, which is used in nearly all dynamic programming languages. Simply, recursion allows a computer program to perform the same task over and over again with different elements or variables.

For example, in JavaScript (which is a dynamic language), the computer program can check to see if an array element is higher or lower than five (for example). Then the computer program can be set to recursively check each element in the array (which is essentially a list), and report whether that array element is smaller or larger than five. This technique is more useful than having the user type every item in the array and sorting through them that way. As usual, Geeks for Geeks has a thorough explanation of quicksort.

The Divide-and-Conquer Strategy Explained

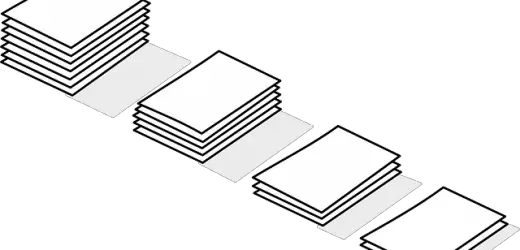

What happens though, if the list is large, containing hundreds and hundreds of entries? Even with recursion, the process will take too long if the computer program can only check one number at a time. That is where the “divide-and-conquer” strategy comes into play. When divide-and-conquer is used by a sorting algorithm, one array element is chosen as a “pivot”. This pivot is then used to break the array up into multiple sections, otherwise known as partitions. Once these partitions are created, then the computer program begins using recursion on each section of the program simultaneously.

An Example of Divide-and-Conquer

An example of this concept in action is as follows:

An array is created with the numbers 3, 6 ,2, 25, 12, 90 and 47, and the algorithm is tasked with sorting these elements in numerical order and returning a completed list. The program designates the data element “25”as the pivot, creating two partitions. The first partition consists of the array elements “3, 6, and 2”. The second partition consists of the array elements “12, 90 and 47”.

The number “25” essentially is placed in the middle. Another way to explain is that the number “25” is untouched by the algorithm. Then, the algorithm simultaneously begins sorting both partitions, with the results being “2, 3, 6, and 12” in the first partition, while the second partition would be “47 and 90”. Meanwhile, the pivot (or 25) would be in the middle.

The results are then combined into one large array, which would appear as “2, 3, 6, 12, 25, 47, and 90”. While this example is concerned with numbers and arrays, due to their association with quicksort, this strategy is not limited to numbers or even sorting. Any large task that could feasibly be divided into a number of small sections can be processed using divide-and-conquer. Divide-and-conquer is not limited to quicksort and many sorting algorithms take advantage of this technique.

One notable feature of Quicksort is that no action is taken in the combine step, unlike certain other algorithms such as mergesort. All of the work of sorting is done in the divide-and-conquer steps, and so the combine step simply puts the list back together. While perhaps not useful for the programmer to know, it is an interesting bit of trivia to learn, and also allows a skilled coder to tweak quicksort for best performance.

There are many reasons to use divide-and conquer, but one obvious gain is if the computer program has access to multiple processors. Each partition can be assigned to a different processor and so many performance bottlenecks can be avoided. More information on quicksort and how it works can be found here.

Why Use Quicksort?

While knowing the concepts behind quicksort is useful, it doesn't explain why we should use Quicksort in the first place, considering other algorithms, such as mergesort, appear to be just as effective on paper.

This is a case where simply looking at formulas or statistics may be misleading. While the worst-case running time of quicksort is as slow and inefficient as selection sort's or insertion sort's running time, the average-case running time is as good as merge-sort's running time, which is O(n² ). Already this running time is quick, and would make for a good reason to use quicksort.

What makes quicksort so useful is that the constant factor represented by the O factor actually is quite good, generally enabling quicksort to match or even be faster than mergesort for real world applications, if the coder is careful in ensuring the optimal conditions for quicksort to operate with optimal efficiency.

Being Smart In Using Quicksort

How do we ensure that quicksort is working at its optimal speed? The answer lies in the partitions. If the programmer is careful in choosing the pivot, and both partitions are of equal size, then the algorithm is much faster at sorting than if the partitions are of unequal size. Read more about it in this article.

If the partitions are of unequal size, the speed advantage inherent to quicksort is lost and the logarithm may be even slower than a stable sort such as mergesort. Careful coding is necessary to preserve quicksort's speed advantage.

For almost any type of sorting that doesn't need to be stable, Quicksort probably would be the first and strongest choice of the veteran programmer. What is stable sorting? Well, that's another question and one that should be answered if the user is to truly understand the capabilities of Quicksort.

Stable and Unstable Sorts Explained

A stable sort essentially tries to keep the order of the original list to be sorted when possible. For example, let's just say a stable sort algorithm, such as Mergesort, had the following array elements to sort alphabetically by first letter:

stable |

space |

arrow |

zebra |

A stable sort algorithm would return the following result:

arrow |

stable |

space |

zebra |

In other words, the algorithm would respect the original order of the entries “stable” and “space” and not alter the order. This is an example of a stable algorithm.

Meanwhile an unstable sort may or may not respect the original order, so the return could either be:

arrow |

space |

stable |

zebra |

or it could be:

arrow |

stable |

space |

zebra |

Please note that neither return is incorrect. If what the programmer requested was to create an alphabetical list by the first letter of the word, both returns are legitimate. However, if the user needs to respect the original order of the array or list when appropriate, then this feature of Quicksort could be seen as a drawback.

However, if the user decides to use a stable sort, certain advantages inherent to unstable sorts are lost. Unstable sorts tend to use much less memory than stable sorts, while still being just as fast. Even better, an unstable sort will generally be easier to code than a stable sort.

Quicksort: A Flexible and Useful Algorithm

But suppose you need a sorting algorithm that is more stable than classic quicksort, but still retains quicksort's speed? Well, that's fairly simple. The quicksort algorithm can be coded to exhibit the same traits as a stable sort algorithm. While this solution does require some skill in coding, the rewards are obvious. When the stability of a stable sort and the memory use and speed of Quicksort are combined, the results can undeniably be effective.

With careful planning and proper coding, quicksort is an undeniably effective sorting algorithm for C++ and Java users. Considering its relative ease of coding and hefty speed advantage, there should be no question as to why quicksort is so popular. As long as the coder understands that the algorithm produces unstable sorts and the partitions must be roughly equivalent, quicksort is a powerful tool. Every coder in need of a sorting algorithm should try it today.

.

What do you think?